Section 4.13 : Newton's Method

The next application that we’ll take a look at in this chapter is an important one that is used in many areas. If you’ve been following along in the chapter to this point, it’s quite possible that you’ve gotten the impression that many of the applications we’ve looked at were just made up to make you work. This is unfortunate, because all of the applications we’ve looked at so far are real applications that are used in real situations. The problem is that, to work more meaningful examples, we would often need more knowledge of the science and/or physics behind the problem than we generally have. Without that knowledge we’re stuck doing fairly simplistic examples that often don’t seem very realistic at all, and that makes it hard to see that the application we’re looking at is a real one.

That is going to change in this section. This is an application that we can all understand, and we can all see why it needs to be done on occasion, even if we don’t understand the physics/science behind any particular use of it.

In this section we are going to look at a method for approximating solutions to equations. We all know that equations need to be solved on occasion and in fact we’ve solved quite a few equations ourselves to this point. In all the examples we’ve looked at to this point we were able to actually find the solutions, but it’s not always possible to do that exactly and/or do the work by hand. That is where this application comes into play. So, let’s see what this application is all about.

Let’s suppose that we want to approximate the solution to \(f\left( x \right) = 0\) and let’s also suppose that we have somehow found an initial approximation to this solution, say \({x_0}\). This initial approximation is probably not all that good (in fact, it may be nothing more than a quick guess we made), and so we’d like to find a better approximation. This is easy enough to do. First, we will get the tangent line to \(f\left( x \right)\) at \({x_0}\).

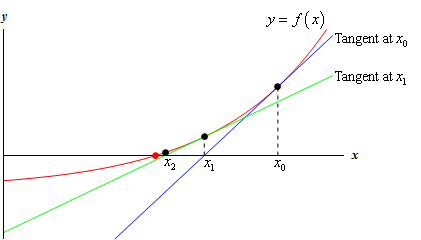

\[y = f\left( {{x_0}} \right) + f'\left( {{x_0}} \right)\left( {x - {x_0}} \right)\]Now, take a look at the graph below.

The blue line (if you’re reading this in color anyway…) is the tangent line at \({x_0}\). We can see that this line will cross the \(x\)-axis much closer to the actual solution to the equation than \({x_0}\) does. Let’s call this point where the tangent at \({x_0}\) crosses the \(x\)-axis \({x_1}\) and we’ll use this point as our new approximation to the solution.

So, how do we find this point? Well, we know its coordinates, \(\left( {{x_1},0} \right)\), and we know that it’s on the tangent line, so we can plug this point into the tangent line and solve for \({x_1}\) as follows,

\[\begin{align*}0 & = f\left( {{x_0}} \right) + f'\left( {{x_0}} \right)\left( {{x_1} - {x_0}} \right)\\ {x_1} - {x_0} & = - \frac{{f\left( {{x_0}} \right)}}{{f'\left( {{x_0}} \right)}}\\ {x_1} & = {x_0} - \frac{{f\left( {{x_0}} \right)}}{{f'\left( {{x_0}} \right)}}\end{align*}\]

So, we can find the new approximation provided the derivative isn’t zero at the original approximation. If \(f'\left( {{x_0}} \right) = 0\) the tangent line is horizontal and, unless \({x_0}\) is already a solution, it never crosses the \(x\)-axis.
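As a quick check of this formula, here is a minimal Python sketch of a single step. The function name `newton_step` and the decision to raise `ZeroDivisionError` when the derivative is zero are our own illustrative choices; the test function \(f\left( x \right) = \cos x - x\) is borrowed from the example worked later in this section.

```python
import math


def newton_step(f, fprime, x0):
    """Return x1 = x0 - f(x0) / f'(x0), the x-intercept of the tangent line at x0."""
    slope = fprime(x0)
    if slope == 0:
        # A horizontal tangent line has no single x-intercept to use as x1.
        raise ZeroDivisionError("f'(x0) = 0, so the tangent line has no usable root")
    return x0 - f(x0) / slope


# Illustrative use: f(x) = cos x - x, f'(x) = -sin x - 1, starting at x0 = 1
x1 = newton_step(lambda x: math.cos(x) - x, lambda x: -math.sin(x) - 1, 1.0)
print(f"x1 = {x1:.10f}")
```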

Now we repeat the whole process to find an even better approximation. We form the tangent line to \(f\left( x \right)\) at \({x_1}\) and use its root, which we’ll call \({x_2}\), as a new approximation to the actual solution. If we do this we will arrive at the following formula.

\[{x_2} = {x_1} - \frac{{f\left( {{x_1}} \right)}}{{f'\left( {{x_1}} \right)}}\]

This point is also shown on the graph above, and we can see from this graph that if we continue following this process we will get a sequence of numbers that get very close to the actual solution. This process is called Newton’s Method.

Here is the general statement of Newton’s Method.

Newton’s Method

If \({x_n}\) is an approximation of a solution of \(f\left( x \right) = 0\) and if \(f'\left( {{x_n}} \right) \ne 0\), the next approximation is given by,

\[{x_{n + 1}} = {x_n} - \frac{{f\left( {{x_n}} \right)}}{{f'\left( {{x_n}} \right)}}\]

This raises the question of when to stop. How many times do we go through this process? One of the more common stopping points is to continue until two successive approximations agree to a given number of decimal places.
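The general iteration, together with a stopping rule, can be sketched in Python as follows. This is a sketch under stated assumptions: the names `newton`, `decimals`, and `max_iter` are hypothetical, and we interpret "two successive approximations agree to \(d\) decimal places" as \(\left| {{x_{n + 1}} - {x_n}} \right| < {10^{ - d}}\), which is one common way to make the rule precise but not the only one. The iteration cap is there because, as discussed later in this section, the method does not always converge.

```python
import math


def newton(f, fprime, x0, decimals=6, max_iter=50):
    """Run Newton's Method from x0 and return the list [x0, x1, x2, ...].

    Stops once two successive approximations differ by less than
    10**(-decimals), our stand-in for "agree to `decimals` places".
    """
    xs = [x0]
    for _ in range(max_iter):
        x = xs[-1]
        slope = fprime(x)
        if slope == 0:
            raise ZeroDivisionError(f"f'(x) = 0 at x = {x}; cannot take another step")
        x_next = x - f(x) / slope  # x_{n+1} = x_n - f(x_n) / f'(x_n)
        xs.append(x_next)
        if abs(x_next - x) < 10 ** (-decimals):
            return xs
    raise RuntimeError(f"no convergence within {max_iter} iterations")


# Illustrative use on f(x) = cos x - x with x0 = 1
xs = newton(lambda x: math.cos(x) - x, lambda x: -math.sin(x) - 1, 1.0)
for n, x in enumerate(xs):
    print(f"x{n} = {x:.10f}")
```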

Before working any examples we should address two issues. First, we really do need to be solving \(f\left( x \right) = 0\) in order for Newton’s Method to be applied. This isn’t really all that much of an issue but we do need to make sure that the equation is in this form prior to using the method.

Secondly, we do need to somehow get our hands on an initial approximation to the solution (i.e. we need \({x_0}\) somehow). One of the more common ways of getting \({x_0}\) is to sketch the graph of the function and use that to get an estimate of the solution, which we then use as \({x_0}\). Another common method: if we know that the equation has a solution in some interval, we can use the midpoint of that interval as \({x_0}\).
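The midpoint rule for choosing \({x_0}\) is simple enough to sketch directly. In this hypothetical helper `midpoint_guess`, the sign check is an extra assumption on our part: if \(f\) is continuous and \(f\left( a \right)\) and \(f\left( b \right)\) have opposite signs, the Intermediate Value Theorem guarantees a solution in \(\left[ {a,b} \right]\), while matching signs give no such guarantee. The usage line takes \(f\left( x \right) = \cos x - x\) on \(\left[ {0,2} \right]\), an interval whose midpoint is the \({x_0} = 1\) used in the example that follows.

```python
import math
import warnings


def midpoint_guess(f, a, b):
    """Return the midpoint of [a, b] as an initial approximation x0."""
    if f(a) * f(b) > 0:
        # Same sign at both ends: the interval may not contain a solution.
        warnings.warn(f"f({a}) and f({b}) have the same sign")
    return (a + b) / 2


x0 = midpoint_guess(lambda x: math.cos(x) - x, 0.0, 2.0)
print(x0)
```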

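Let’s work an example of Newton’s Method. We will determine an approximation to the solution to \(\cos x = x\) that lies in the interval \(\left[ {0,2} \right]\), and we want the approximation to six decimal places.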

First note that we weren’t given an initial guess. We were, however, given an interval in which to look. We will use this to get our initial guess. As noted above, the general rule of thumb in these cases is to take the initial approximation to be the midpoint of the interval. So, we’ll use \({x_0} = 1\) as our initial guess.

Next, recall that we must have the equation in the form \(f\left( x \right) = 0\). Therefore, we first rewrite the equation as,

\[\cos x - x = 0\]

So \(f\left( x \right) = \cos x - x\) and \(f'\left( x \right) = - \sin x - 1\). We can now write down the general formula for Newton’s Method. Doing this will often simplify the work a little, so it’s generally not a bad idea.

\[{x_{n + 1}} = {x_n} - \frac{{\cos {x_n} - {x_n}}}{{ - \sin {x_n} - 1}}\]

Let’s now get the first approximation.

\[{x_1} = 1 - \frac{{\cos \left( 1 \right) - 1}}{{ - \sin \left( 1 \right) - 1}} = 0.7503638678\]

At this point we should point out that the phrase “six decimal places” does not mean just get \({x_1}\) to six decimal places and then stop. Instead, it means that we continue until two successive approximations agree to six decimal places.

Given that stopping condition, we clearly need to go at least one step farther.

\[{x_2} = 0.7503638678 - \frac{{\cos \left( {0.7503638678} \right) - 0.7503638678}}{{ - \sin \left( {0.7503638678} \right) - 1}} = 0.7391128909\]

Alright, we’re making progress: \({x_1}\) and \({x_2}\) agree to one decimal place. Let’s do another one, leaving the details of the computation to you.

\[{x_3} = 0.7390851334\]

Now \({x_2}\) and \({x_3}\) agree to three decimal places. We’ll need another one.

\[{x_4} = 0.7390851332\]

And now we’ve got two approximations that agree to nine decimal places, and so we can stop. We will assume that the solution is approximately \({x_4} = 0.7390851332\).
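The hand computations in this example can be reproduced with a short Python sketch. The helper names `next_approx` and `agree` are ours, and "agree to six decimal places" is read here as rounding both values to six places and comparing them. Two values on opposite sides of a rounding boundary can differ by far less than \({10^{ - 6}}\) and still round differently, so treat this as one reasonable reading of the rule rather than the only one. The printed values should match \({x_1}\) through \({x_4}\) above, up to rounding in the last digit.

```python
import math


def next_approx(x):
    """x_{n+1} = x_n - (cos x_n - x_n) / (-sin x_n - 1)."""
    return x - (math.cos(x) - x) / (-math.sin(x) - 1)


def agree(a, b, places):
    """True if a and b round to the same value at `places` decimal places."""
    return round(a, places) == round(b, places)


xs = [1.0]  # x0, the midpoint of the interval
for n in range(1, 51):  # iteration cap, in case the approximations never settle
    xs.append(next_approx(xs[-1]))
    print(f"x{n} = {xs[-1]:.10f}")
    if agree(xs[-2], xs[-1], 6):
        break
```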

In this last example we saw that we didn’t have to do too many computations in order for Newton’s Method to give us an approximation to the desired accuracy. This will not always be the case. Sometimes it will take many iterations to get to the desired accuracy, and on occasion the method can fail completely.

The following example is a little silly, but it makes the point about the method failing. Starting with \({x_0} = 1\), we will apply Newton’s Method to \(f\left( x \right) = \sqrt[3]{x}\).

Yes, it’s a silly example. Clearly the solution is \(x = 0\), but it does make a very important point. Let’s get the general formula for Newton’s Method.

\[{x_{n + 1}} = {x_n} - \frac{{{x_n}^{\frac{1}{3}}}}{{\frac{1}{3}{x_n}^{ - \frac{2}{3}}}} = {x_n} - 3{x_n} = - 2{x_n}\]

In fact, we don’t really need to do any computations here. Each iteration doubles the distance from the solution, \(x = 0\), and flips the sign, so the approximations get farther and farther away from the solution. Here are a couple of computations to make the point.

\[\begin{align*}{x_1} & = - 2\\ {x_2} & = 4\\ {x_3} & = - 8\\ {x_4} & = 16\\ & \vdots \end{align*}\]

So, in this case the method fails, and fails spectacularly.
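The divergence is easy to reproduce numerically. This Python sketch (the names `cbrt`, `f`, and `fprime` are ours) applies the general Newton formula to \(f\left( x \right) = \sqrt[3]{x}\) rather than the simplified \({x_{n + 1}} = - 2{x_n}\). One practical detail: in Python, raising a negative number to the power `1 / 3` returns a complex number, so the sketch builds a real cube root from `abs` and `math.copysign`.

```python
import math


def cbrt(x):
    """Real cube root; abs() plus copysign avoids Python's complex result for x < 0."""
    return math.copysign(abs(x) ** (1 / 3), x)


def f(x):
    return cbrt(x)


def fprime(x):
    # f'(x) = (1/3) x^(-2/3), which is positive for every x != 0
    return (1 / 3) * abs(x) ** (-2 / 3)


x = 1.0  # x0
iterates = []
for n in range(1, 5):
    x = x - f(x) / fprime(x)  # general Newton step
    iterates.append(x)
    print(f"x{n} = {x:.6f}")
```

The iterates double in size and alternate in sign; in floating point they may differ from the exact integers in the last few bits.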

So, we need to be a little careful with Newton’s Method. It will usually find an approximation to a solution quickly. However, there are times when it will take a lot of work or when it won’t work at all.